About LEXIS Platform

The LEXIS platform enables easy access to distributed High-Performance Computing (HPC) and Cloud computing infrastructure for science, SMEs and industry. It supports automated workflows across Cloud and HPC systems, either on site or across different European supercomputing centres.

Online service for easy access to HPC and data-driven workflows

HPC-as-a-Service approach

Integration of HPC and Cloud computing systems

Distributed compute and storage resources available through connected providers

Non-intrusive integration components for HPC running entirely in user space

Management of complex computing workflows

Declarative workflows

Workflow orchestration with automated data transfers

Multi-tenant service with RBAC user access management

User-space integration with a focus on non-intrusiveness

Distributed data management

Data management on object storage (iRODS)

Direct data transfers to resource providers

Staging between HPC parallel filesystem and external resources

FAIR features, including DataCite metadata and PID support

Data access through web-based GUIs and APIs

Focus on maintaining autonomy of resource providers

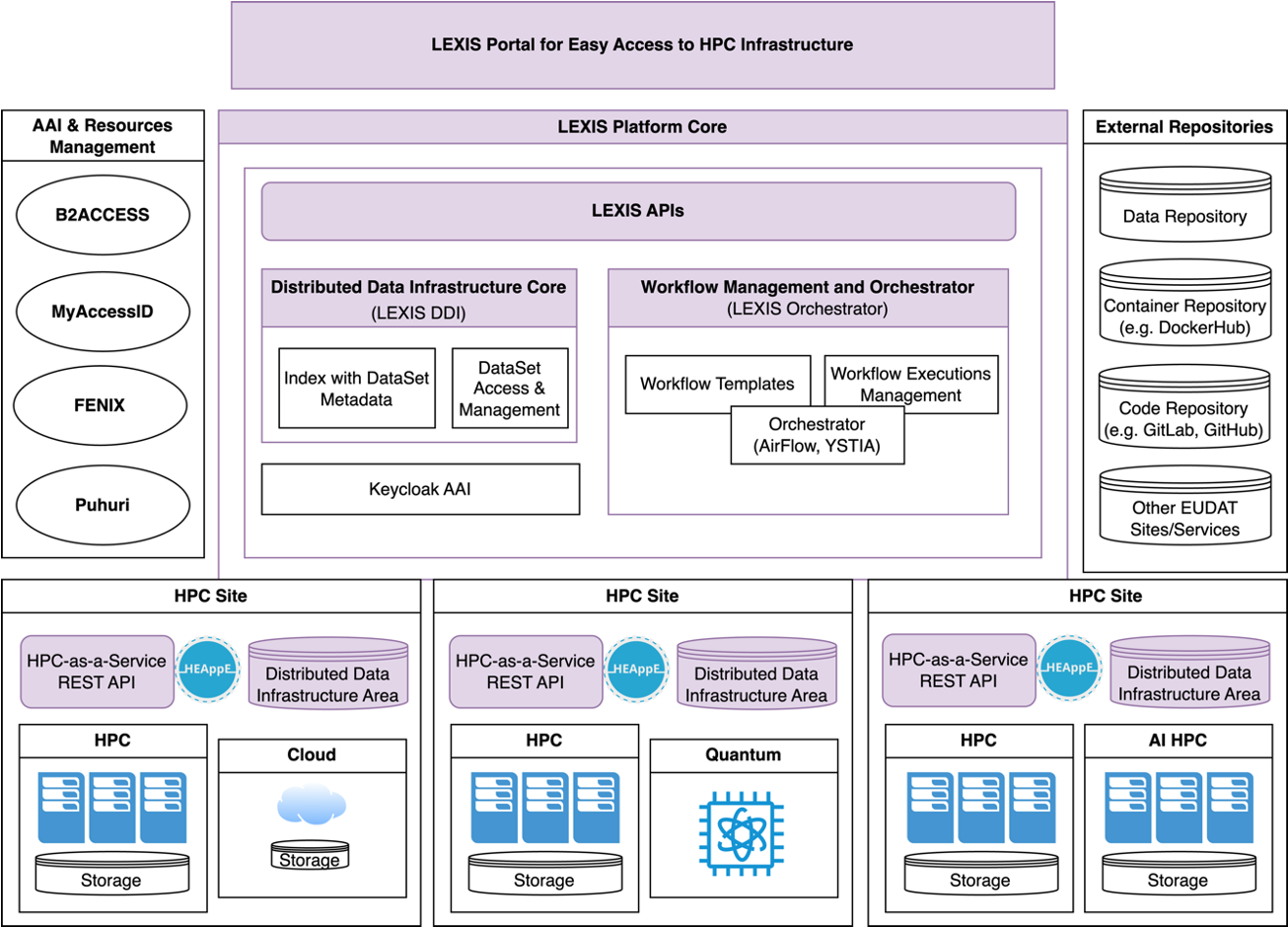

Architecture

The platform architecture is service-oriented and grouped into three layers:

The user interface layer, comprising the LEXIS Portal web GUI and well-specified APIs along with wrappers for Python and R

The core platform layer, operating the AAI (authentication and authorisation infrastructure) backend components, the workflow orchestrator, and the distributed data interface along with the metadata index

The provider layer, containing the actual compute and storage resources, which are connected to the core by a set of integration components

HPC-as-a-Service approach

Traditionally, supercomputing centres impose strict rules for accessing High-Performance Computing (HPC) resources. The classic process requires familiarity with command-line tools such as SSH and knowledge of the complexities of HPC environments, including batch schedulers, storage tiers and software modules.

In contrast, commercial cloud providers offer quick access with single sign-on, token-based authentication, and flexible, user-friendly services, often tailored for specific needs.

The LEXIS Platform modernises HPC access by adopting an HPC-as-a-Service approach built on the HEAppE middleware. HEAppE lets users submit jobs securely, authenticating either through external identity providers or against the platform’s own database. Depending on the mode of integration, jobs run under a mapped HPC account associated with the user’s project.

Job execution is controlled through pre-defined script templates, and output logs are kept at each site. For convenience, the user sets only the essential parameters, often directly via the LEXIS Portal or an API call.
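To illustrate the template idea, the sketch below fills a pre-defined job script template with a small, fixed set of user-supplied parameters and rejects anything else. The template text, the Slurm-style directives, the parameter names (`nodes`, `walltime`, `input_path`) and the project-to-account table are hypothetical assumptions for illustration; this is not the HEAppE API.

```python
import shlex
from string import Template

# Hypothetical site-maintained template: users can only influence the $-placeholders.
JOB_TEMPLATE = Template(
    "#!/bin/bash\n"
    "#SBATCH --account=$account\n"
    "#SBATCH --nodes=$nodes\n"
    "#SBATCH --time=$walltime\n"
    "srun ./simulation --input $input_path\n"
)

# Hypothetical set of parameters a user is allowed to set.
ALLOWED_PARAMS = {"nodes", "walltime", "input_path"}

# Hypothetical mapping from a LEXIS project to the HPC account used at the site.
PROJECT_TO_HPC_ACCOUNT = {"demo-project": "proj-0001"}


def render_job_script(project: str, params: dict) -> str:
    """Fill the site template with user parameters and the mapped HPC account."""
    unknown = set(params) - ALLOWED_PARAMS
    if unknown:
        raise ValueError(f"parameters not allowed by template: {sorted(unknown)}")
    missing = ALLOWED_PARAMS - set(params)
    if missing:
        raise ValueError(f"missing parameters: {sorted(missing)}")
    account = PROJECT_TO_HPC_ACCOUNT[project]
    # Shell-quote user values so they cannot inject extra commands into the script.
    quoted = {key: shlex.quote(str(value)) for key, value in params.items()}
    return JOB_TEMPLATE.substitute(account=account, **quoted)


script = render_job_script(
    "demo-project",
    {"nodes": 2, "walltime": "01:00:00", "input_path": "/scratch/in.dat"},
)
print(script)
```

Keeping the template on the provider side means the site decides what actually runs, while the user only supplies values for the exposed placeholders.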

Integrating HPC and Cloud systems

The LEXIS Platform can use both HPC clusters and Cloud environments (e.g. OpenStack, Kubernetes) as backends. This reflects today’s reality in European supercomputing centres, where HPC and Cloud infrastructures increasingly complement each other.

HPC clusters provide maximum performance with bare-metal hardware and specialised software stacks.

Cloud platforms offer flexibility and customisation at the operating-system level.

Through HEAppE, LEXIS can run batch jobs or launch containers in both environments. It also supports scenarios where containers run directly on HPC systems.

Managing complex HPC/Cloud workflows

The LEXIS Orchestrator handles complex workflows that combine HPC, Cloud, quantum and AI resources with automated data transfers. It does so using a custom provider library for Apache Airflow.

Workflow orchestration

Most real-world workflows involve multiple steps, represented as a directed acyclic graph (DAG). LEXIS executes such workflows across both HPC schedulers and Cloud systems.

This is powered by Apache Airflow, extended with adaptors for HEAppE (HPC job submission) and for the distributed data interface (seamless data staging).

LEXIS also offers a declarative workflow specification based on YAML; see LEXIS Workflow Definition.
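A multi-step workflow represented as a DAG runs each task once all of its upstream tasks have finished. The sketch below uses Python's standard `graphlib` module (Python 3.9+), not Airflow, to compute a valid execution order for a hypothetical stage-in, compute and stage-out workflow; the task names are illustrative assumptions.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each task maps to the set of tasks it depends on.
workflow = {
    "stage_in_from_ddi": set(),
    "preprocess_on_cloud": {"stage_in_from_ddi"},
    "simulate_on_hpc": {"preprocess_on_cloud"},
    "train_model_on_hpc": {"preprocess_on_cloud"},
    "stage_out_to_ddi": {"simulate_on_hpc", "train_model_on_hpc"},
}


def execution_batches(graph: dict) -> list:
    """Group tasks into batches; tasks in the same batch have no mutual dependencies."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph is not acyclic
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)  # mark the batch finished so its dependants become ready
    return batches


for step, batch in enumerate(execution_batches(workflow)):
    print(step, batch)
```

The two compute tasks end up in the same batch because both depend only on preprocessing, which is how a scheduler can run independent branches concurrently. In Airflow itself, the same dependencies would be declared between tasks in a DAG definition.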

Identity, access, and security

The LEXIS management core ensures secure identity and access management. It supports:

single sign-on based on OIDC and Keycloak,

delegated authentication with European federated identities (MyAccessID and EUDAT B2ACCESS),

role-based access control (RBAC).

Security follows a “zero trust” and “security by design” approach. The modular architecture relies on REST APIs, OAuth 2.0, OpenID Connect and custom RBAC management.
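The core idea of RBAC is that permissions are attached to roles and users are assigned roles, here per project. The following minimal sketch shows such a check; the role names, permission strings and in-memory tables are hypothetical and do not reflect the actual LEXIS role model.

```python
# Hypothetical permissions granted by each role.
ROLE_PERMISSIONS = {
    "project_owner": {"job:submit", "data:read", "data:write", "project:manage_members"},
    "project_member": {"job:submit", "data:read", "data:write"},
    "project_viewer": {"data:read"},
}

# Hypothetical role assignments: (user, project) -> set of roles.
USER_ROLES = {
    ("alice", "climate-sim"): {"project_owner"},
    ("bob", "climate-sim"): {"project_viewer"},
}


def is_allowed(user: str, project: str, permission: str) -> bool:
    """Return True only if some role the user holds in the project grants the permission."""
    roles = USER_ROLES.get((user, project), set())
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


print(is_allowed("alice", "climate-sim", "project:manage_members"))
print(is_allowed("bob", "climate-sim", "job:submit"))
```

Unknown users, projects or roles fall through to `False`, so access is denied by default, in line with the zero-trust approach.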

Automated data management

The Distributed Data Infrastructure (DDI) provides a unified way of managing data across distributed resources. It enables automatic data transfers between locations during workflows and gives users easy access through the Portal and the APIs. It relies on a centralised metadata index and a unified API for data transfers based on iRODS and its HTTP API.

For details, see Distributed Data Infrastructure.

Data upload and download

Users can:

upload and download data via resumable HTTPS (Portal),

transfer data directly via iRODS.
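Resumable transfers generally work by splitting a file into chunks and, after an interruption, continuing from the last offset the server has confirmed. The sketch below shows only that offset logic on an in-memory byte string; the chunk size and the `confirmed_offset` value are assumptions, and it does not implement the actual protocol used by the LEXIS Portal.

```python
from typing import Iterator, Tuple


def remaining_chunks(data: bytes, confirmed_offset: int, chunk_size: int) -> Iterator[Tuple[int, bytes]]:
    """Yield (offset, chunk) pairs still to be sent, starting at the server-confirmed offset."""
    if not 0 <= confirmed_offset <= len(data):
        raise ValueError("confirmed offset lies outside the file")
    if chunk_size <= 0:
        raise ValueError("chunk size must be positive")
    for offset in range(confirmed_offset, len(data), chunk_size):
        yield offset, data[offset:offset + chunk_size]


payload = b"0123456789" * 3  # 30 bytes of example data
# Suppose the server already holds the first 12 bytes when the connection dropped.
for offset, chunk in remaining_chunks(payload, confirmed_offset=12, chunk_size=8):
    print(offset, len(chunk))
```

Only the bytes after the confirmed offset are re-sent, which is what makes a large upload survive a dropped connection without starting over.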

Once indexed in the DDI, data is accessible throughout the LEXIS Platform. In this design:

data is stored on each resource provider in an iRODS zone

only metadata is mirrored to a central index

data can be staged from iRODS to HPC and back

ingestion from external resources is supported

data can be managed in the web interface and from the CLI with Py4Lexis

This design ensures seamless integration with various HPC providers while conforming to their resource allocation policies and access management.
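The split between data kept at each provider and metadata mirrored to a central index can be modelled in a few lines. In the hypothetical sketch below, each zone holds the actual bytes while the central index stores only the zone, the path and descriptive metadata; the zone names, dataset IDs and metadata fields are illustrative assumptions, not the DDI schema.

```python
from dataclasses import dataclass, field


@dataclass
class Zone:
    """A provider-side storage zone holding the actual data (hypothetical model)."""
    name: str
    objects: dict = field(default_factory=dict)  # path -> bytes


@dataclass
class CentralIndex:
    """Central index that stores metadata and locations only, never the data itself."""
    entries: dict = field(default_factory=dict)  # dataset_id -> metadata dict

    def register(self, dataset_id: str, zone: Zone, path: str, metadata: dict) -> None:
        # Only the location and descriptive metadata are mirrored centrally.
        self.entries[dataset_id] = {"zone": zone.name, "path": path, **metadata}

    def locate(self, dataset_id: str) -> tuple:
        entry = self.entries[dataset_id]
        return entry["zone"], entry["path"]


site_a = Zone("zoneA")
site_a.objects["/zoneA/project/run1/output.h5"] = b"simulation output"

index = CentralIndex()
index.register("ds-001", site_a, "/zoneA/project/run1/output.h5", {"title": "Run 1 output"})
print(index.locate("ds-001"))
```

A lookup in the index tells a workflow where to stage data from, while the bytes themselves never leave the provider's zone unless a transfer is requested.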

FAIR data and EUDAT integration

LEXIS supports the FAIR principles (Findable, Accessible, Interoperable, Reusable) by enforcing the DataCite 4.5 metadata standard for published datasets. LEXIS also provides convenient methods for downloading data, as well as unique identifiers that can be used for further referencing.
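The DataCite schema defines a set of mandatory properties: Identifier, Creator, Title, Publisher, PublicationYear and ResourceType. The sketch below checks a simplified record for them; the record keys are loosely modelled on the JSON attribute names of the DataCite REST API, the DOI and values are made up, and this is not the LEXIS validation code.

```python
# Mandatory DataCite properties mapped to simplified JSON-style keys (assumption).
MANDATORY = {
    "Identifier": "doi",
    "Creator": "creators",
    "Title": "titles",
    "Publisher": "publisher",
    "PublicationYear": "publicationYear",
    "ResourceType": "types",
}


def missing_mandatory(record: dict) -> list:
    """Return the DataCite property names that are absent or empty in the record."""
    return [prop for prop, key in MANDATORY.items() if not record.get(key)]


record = {
    "doi": "10.1234/example-dataset",  # made-up DOI for illustration
    "creators": [{"name": "Doe, Jane"}],
    "titles": [{"title": "Example simulation output"}],
    "publisher": "Example Publisher",
    "publicationYear": 2024,
    "types": {"resourceTypeGeneral": "Dataset"},
}

print(missing_mandatory(record))  # -> []
```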

Resource allocation and accounting

The LEXIS Platform adapts to the resource allocation and accounting models of the connected resource providers.

Each LEXIS project groups users and resource allocations. Projects are strictly isolated from one another; access is granted only upon a user's request and its acceptance by the project owner.
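The membership rule can be read as a small state machine: a user asks to join, and only the project owner can accept the request. The sketch below is a hypothetical in-memory model; the class and method names are assumptions, not the LEXIS API.

```python
class Project:
    """Hypothetical model of a project with owner-approved membership."""

    def __init__(self, name: str, owner: str):
        self.name = name
        self.owner = owner
        self.members = {owner}
        self.pending = set()

    def request_access(self, user: str) -> None:
        # Record a join request; existing members need no request.
        if user not in self.members:
            self.pending.add(user)

    def accept(self, acting_user: str, user: str) -> None:
        # Only the project owner may turn a pending request into membership.
        if acting_user != self.owner:
            raise PermissionError("only the project owner can accept requests")
        if user not in self.pending:
            raise ValueError(f"no pending request from {user}")
        self.pending.remove(user)
        self.members.add(user)

    def can_access(self, user: str) -> bool:
        # Isolation: non-members, including users with pending requests, have no access.
        return user in self.members


p = Project("climate-sim", owner="alice")
p.request_access("bob")
print(p.can_access("bob"))  # False until the owner accepts
p.accept("alice", "bob")
print(p.can_access("bob"))  # True
```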

More information: Resource management and accounting.