Staging, compression and encryption service

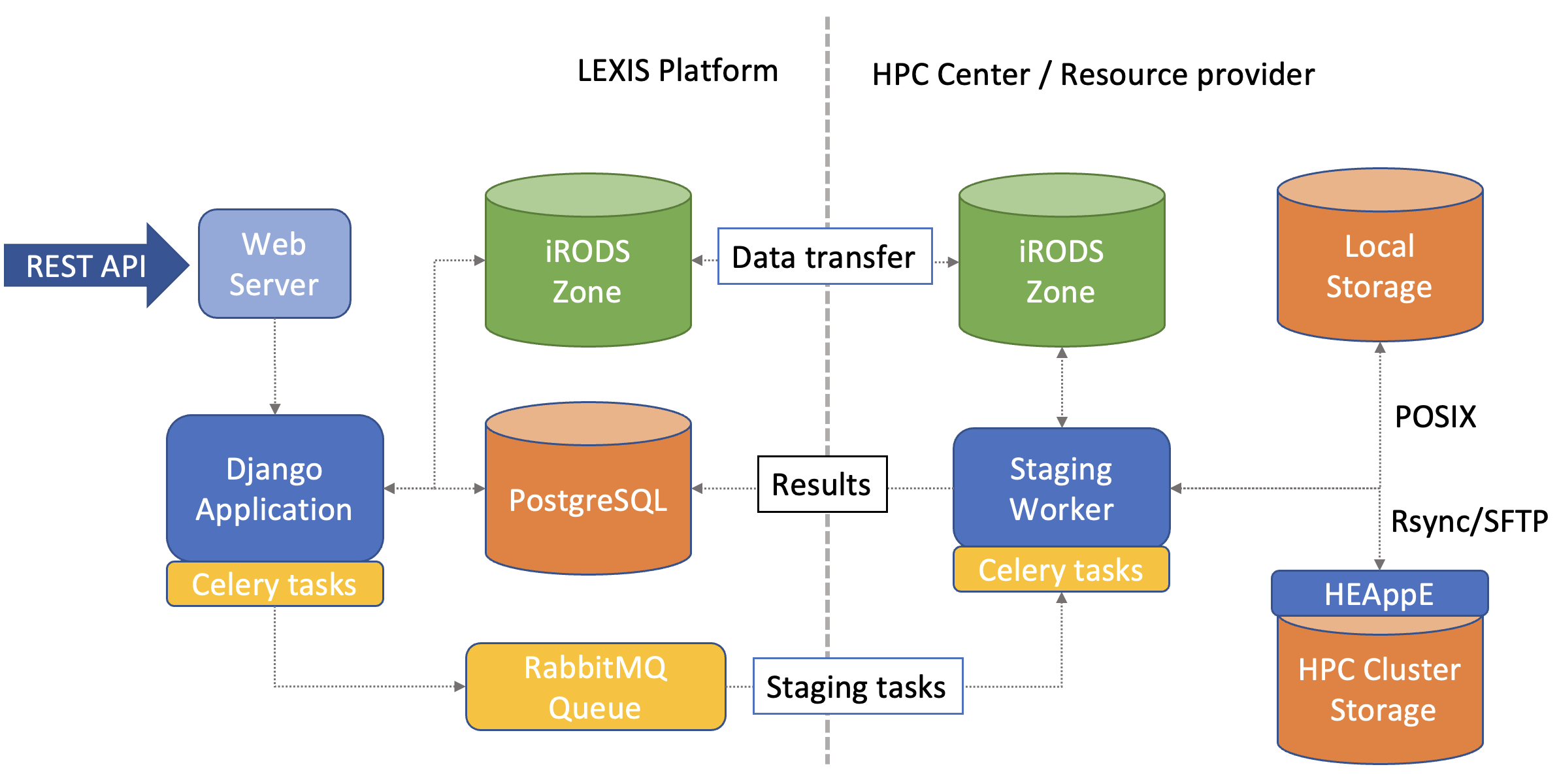

In LEXIS, the orchestrator that manages the workflows triggers data movement between the components of the LEXIS Data Infrastructure. This includes moving and deleting input, intermediate, and output files. The staging REST API was created for this purpose: it provides endpoints that the orchestrator uses to trigger move, copy, and delete operations. On top of that, the API offers operations for data compression and encryption. It also supports combining compression with encryption, and decompression with decryption, in a single task.

Performing such operations on large datasets takes time, which makes them unsuitable for handling within a short-lived HTTP request to a REST API. An asynchronous mechanism for executing requests was therefore needed, so the backend uses a distributed task queue connected to a RabbitMQ broker. When a request is made to the Staging API, a data management task is pushed to the queue. Each task is assigned a unique ID.
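The submit-then-poll pattern described above can be sketched with the Python standard library alone. This is not the LEXIS implementation: the in-memory queue and status dictionary are hypothetical stand-ins for the RabbitMQ broker and the result store, and the function names, paths, and state names are illustrative assumptions.

```python
import queue
import threading
import uuid

# Hypothetical in-memory stand-ins for the broker and the result store.
task_queue = queue.Queue()
task_status = {}
status_lock = threading.Lock()


def submit_task(operation, source, target):
    """Enqueue a data management task and return its unique ID immediately."""
    task_id = str(uuid.uuid4())
    with status_lock:
        task_status[task_id] = "PENDING"
    task_queue.put((task_id, operation, source, target))
    return task_id


def get_status(task_id):
    """Look up the current state of a task, as a status endpoint would."""
    with status_lock:
        return task_status.get(task_id, "UNKNOWN")


def worker():
    """Consume tasks until a None sentinel arrives."""
    while True:
        item = task_queue.get()
        if item is None:
            break
        task_id, operation, source, target = item
        with status_lock:
            task_status[task_id] = "STARTED"
        # A real worker would copy, move, or delete data here.
        with status_lock:
            task_status[task_id] = "SUCCESS"


def run_demo():
    """Submit one task, let a worker thread process it, and report its state."""
    thread = threading.Thread(target=worker)
    thread.start()
    task_id = submit_task("copy", "/staging/in", "/staging/out")  # hypothetical paths
    task_queue.put(None)  # tell the worker to stop after the queued task
    thread.join()
    return task_id, get_status(task_id)
```

The caller receives the ID as soon as the task is queued, so the HTTP request can return without waiting for the data operation to finish.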

This ID can be used to track the status of the request via the API. Django was chosen as the REST framework because it offers simplicity, flexibility, reliability, and scalability. The Django framework is connected to a database, for which we use PostgreSQL. For the task queue, the Celery framework (https://docs.celeryq.dev/en/stable/index.html) was chosen. Celery provides a simple mechanism to connect with Django and is widely used in the open source community. We deployed an instance of the RabbitMQ broker, and task status information is stored in a PostgreSQL result backend.

The Celery framework allows individual tasks to be triggered in patterns such as chains or chords. This lets the platform perform compression, encryption, and staging operations in a single asynchronous operation, according to the passed parameters.
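To illustrate the chain idea, here is a minimal sketch in plain Python, assuming that each step receives the path produced by the previous one. The step functions, parameter names, and paths are hypothetical placeholders and only manipulate strings. In Celery, the equivalent construct is a `chain` of task signatures, where each task's result is passed to the next task.

```python
from functools import reduce


def compress(path):
    # Placeholder: a real task would write a compressed archive.
    return path + ".gz"


def encrypt(path):
    # Placeholder: a real task would write an encrypted copy.
    return path + ".enc"


def stage(path, target_dir):
    # Placeholder: a real task would transfer the file to the target.
    filename = path.rsplit("/", 1)[-1]
    return target_dir.rstrip("/") + "/" + filename


def build_pipeline(do_compress, do_encrypt, target_dir):
    """Select the steps to run, in order, from the request parameters."""
    steps = []
    if do_compress:
        steps.append(compress)
    if do_encrypt:
        steps.append(encrypt)
    steps.append(lambda path: stage(path, target_dir))
    return steps


def run_pipeline(steps, source):
    """Feed the output of each step into the next one, like a chain."""
    return reduce(lambda result, step: step(result), steps, source)
```

For example, `run_pipeline(build_pipeline(True, True, "/hpc/project"), "/staging/data.tar")` yields `"/hpc/project/data.tar.gz.enc"`.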

Source code

The source code for the APIs and workers is available at: https://opencode.it4i.eu/lexis-platform/data/api.

Staging worker

The staging worker is a Celery worker instance that is connected to the central PostgreSQL and RabbitMQ instances. It executes the actual tasks, which access the data and perform the requested operations. Each location has its own queue and can deploy multiple workers, depending on its scalability requirements. Triggering tasks and submitting them to queues is handled by the Staging API Django application.
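The per-location queues could be modelled as a simple lookup on the API side. The sketch below is an assumption, not the LEXIS code: the location names, the queue naming scheme, and the choice to route by source location are all hypothetical. In Celery, a queue name chosen this way can be passed to `apply_async(queue=...)`, and a worker can be bound to specific queues with the `-Q` option.

```python
# Hypothetical location names and queue naming scheme.
LOCATION_QUEUES = {
    "site_a": "staging.site_a",
    "site_b": "staging.site_b",
}


def queue_for(location):
    """Return the queue served by the workers deployed at a location."""
    try:
        return LOCATION_QUEUES[location]
    except KeyError:
        raise ValueError(f"no staging queue configured for {location!r}") from None


def route_task(task_name, source_location):
    """Build the routing options for a task (assumes routing by source location)."""
    return {"task": task_name, "queue": queue_for(source_location)}
```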

The staging worker supports the following data transfer protocols:

- Direct POSIX access (staging area)
- Rsync/SFTP for HPC clusters
- iRODS zones/federation
- S3/Swift object storages (planned)
- Relational and document databases (planned)

Encryption and compression workers

These workers are implemented in the same way as the staging worker, as Celery processes, which gives them the same scaling capability. The compression worker uses a parallel gzip implementation which, combined with fast flash-based storage for the staging area, allows fast compression and decompression of large datasets. Thanks to the modular nature of the Celery workers, more compression methods can be added.
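The source does not name the parallel gzip tool in use. As one way to picture the technique, the sketch below compresses fixed-size chunks in separate threads and concatenates the resulting gzip members, which together still form a valid gzip stream. The function name, chunk size, and worker count are illustrative assumptions.

```python
import gzip
from concurrent.futures import ThreadPoolExecutor


def parallel_gzip(data, chunk_size=1 << 20, workers=4):
    """Compress chunks of `data` concurrently and join them as gzip members."""
    # Split the input into independent chunks; keep one empty chunk for empty input.
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)] or [b""]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so the members stay in sequence.
        members = list(pool.map(gzip.compress, chunks))
    return b"".join(members)
```

The output can be read back with any gzip reader that supports multi-member streams, for example `gzip.decompress` in Python. Compressing chunks independently trades a slightly lower compression ratio for the ability to use several cores.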

The encryption worker handles symmetric encryption of datasets using OpenSSL and the AES-256 algorithm, hardware-accelerated where available. Keys are handled automatically within the platform for each encryption and decryption operation.
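How the worker invokes OpenSSL is not described here. The sketch below only shows what a call to the `openssl enc` command-line tool could look like. It assumes CBC mode, PBKDF2 key derivation, and a passphrase stored in a key file, none of which is confirmed by the source; the function names are hypothetical.

```python
import secrets


def generate_key_hex():
    """Return a random 256-bit secret as 64 hexadecimal characters."""
    return secrets.token_hex(32)


def openssl_command(source, target, key_file, decrypt=False):
    """Build an `openssl enc` argument list (assumed mode: AES-256-CBC)."""
    command = ["openssl", "enc", "-aes-256-cbc", "-pbkdf2", "-salt"]
    if decrypt:
        command.append("-d")
    # The passphrase is read from the first line of key_file.
    command += ["-in", source, "-out", target, "-pass", f"file:{key_file}"]
    return command
```

The returned list can be handed to `subprocess.run(command, check=True)` on a host where the `openssl` binary is installed.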

Data transfer API

The data transfer API handles the upload and download of datasets in the LEXIS Platform, including their metadata. It is used for chunked HTTPS-based upload, implemented with the TUS protocol, and for HTTPS-based download of dataset contents. It relies on the staging and metadata APIs for its operation.
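As a rough illustration of a chunked TUS upload, the sketch below prepares the headers that a client sends under version 1.0.0 of the protocol: one creation request announcing the total size, followed by one `PATCH` request per chunk carrying its byte offset. It builds the requests without sending them. The function names and chunk size are assumptions, and the LEXIS endpoint URLs are deliberately left out.

```python
TUS_VERSION = "1.0.0"


def creation_headers(total_size):
    """Headers for the POST request that creates the upload resource."""
    return {"Tus-Resumable": TUS_VERSION, "Upload-Length": str(total_size)}


def patch_requests(data, chunk_size):
    """Yield (headers, body) pairs for the PATCH requests, one per chunk."""
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        headers = {
            "Tus-Resumable": TUS_VERSION,
            "Upload-Offset": str(offset),
            "Content-Type": "application/offset+octet-stream",
            "Content-Length": str(len(chunk)),
        }
        yield headers, chunk
```

Because each chunk carries its own offset, an interrupted upload can resume from the last offset the server acknowledged instead of starting over.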