About LEXIS Platform

Welcome to the LEXIS Platform documentation. This page gives an overview of the LEXIS Platform, its architecture and components.

The public endpoints for the platform services:

https://portal.lexis.tech – LEXIS Platform Portal

https://api.lexis.tech – public LEXIS Platform APIs

https://aai.lexis.tech/auth – authentication services endpoint

Overview and architecture

The LEXIS platform enables easy access to distributed High-Performance-Computing (HPC) and Cloud-Computing infrastructure for science, SMEs and industry. It enables automatised workflows across Cloud and HPC systems, on site or across different European supercomputing centres.

Below, we show the main features, concepts and components of LEXIS:

Platform architecture for easy access and automatised data-driven workflows

HPC-as-a-Service approach

Embedding of HPC and Cloud-Computing systems

LEXIS Platform Core vs. LEXIS Platform Node features

Management of complex, mixed Cloud-Computing/HPC workflows

Workflow orchestration

Platform management core - user-identity/access management and security

Automated big data management in workflows

Data upload and download

Distributed Data Infrastructure, staging and connectivity

EUDAT features, FAIR data

Data safety and security

The last sections of this page lay out the platform concept as seen by the user, with pointers to our actual user interfaces.

Platform architecture for easy access and automatised data-driven workflows

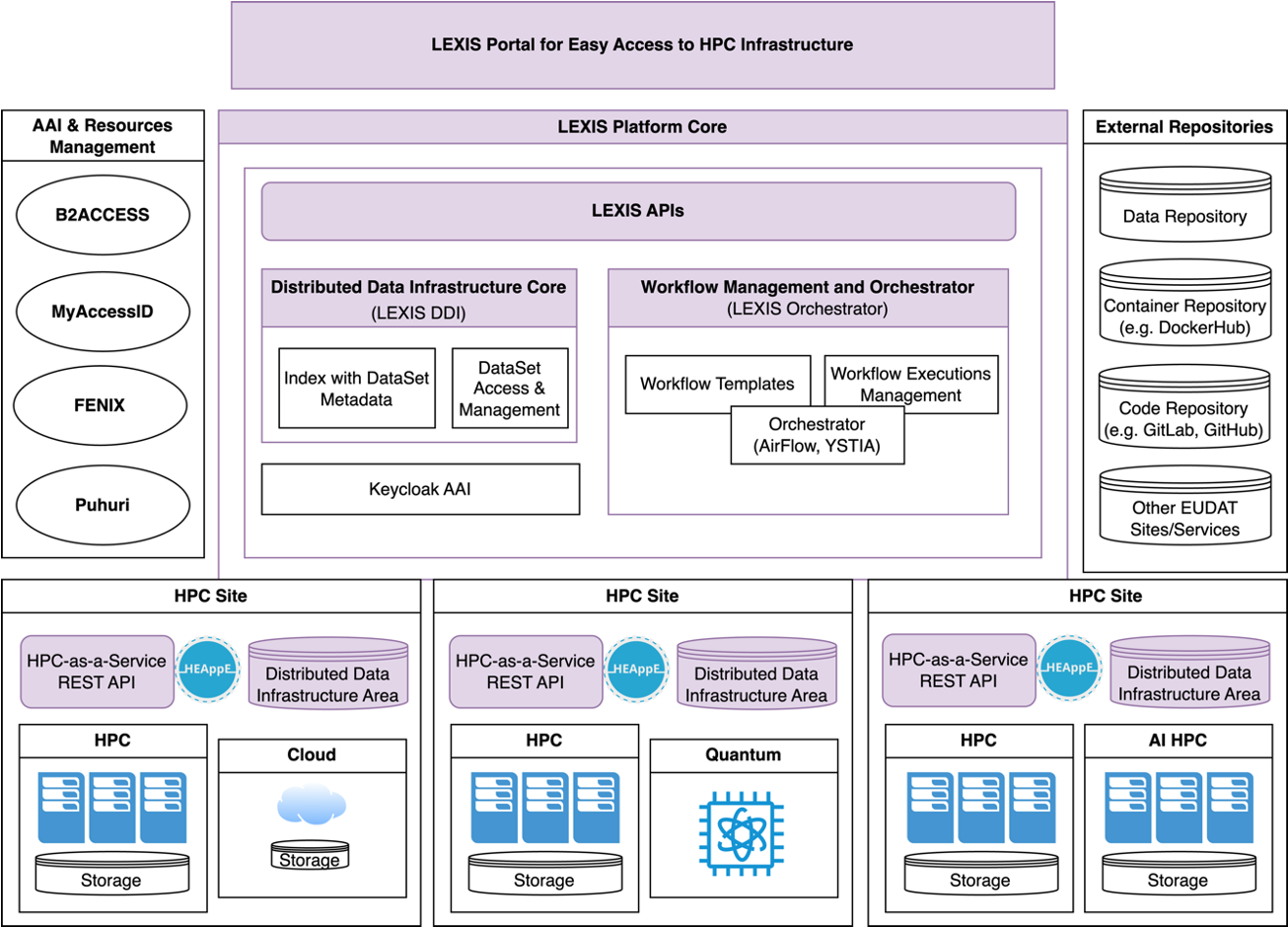

An overview of the LEXIS Platform architecture is shown in this figure:

The LEXIS Platform federates Supercomputing centres (HPC Sites, lower part of figure) and makes them accessible via a platform core (upper, central part) managing data and workflows. A REST-API based architecture connects this to the LEXIS Portal (topmost part). For low-threshold integration into the European HPC and Cloud-Computing landscape, several identity providers / management systems (upper/left part) can be used with LEXIS. Data are easily exchanged with relevant repositories and with the EUDAT collaborative data infrastructure.

HPC-as-a-Service approach

Supercomputing centres have strict policies on the usage of resources, in particular of HPC machines. The classic approach is to submit an application for computing time. After this lengthy process, a personalised user account is created, and access is usually granted through SSH-terminal-based login.

This approach is challenged by popular Cloud-Computing providers, who provide platforms with easy single-sign-on, token-based authentication and user-friendly, flexible approaches to computing.

In the LEXIS Platform, we rely on an HPC-as-a-Service approach based on the HEAppE solution. The HEAppE middleware allows HPC jobs to be submitted with flexible authentication against external identity providers or an internal user database. Depending on the computing facility, jobs are then executed under a mapped “classical” HPC-system account (usually a functional account with access to an appropriate computing-time grant the user is eligible for). Job execution is logged where needed. It can be controlled by job-submission script templates in which, depending on the use case, only essential parameters can be modified. These parameters can be exposed via the LEXIS Portal for convenient setting by the user.
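The template idea can be illustrated with a minimal sketch. It is not HEAppE code: the scheduler directives, the `simulate` command and the names `JOB_TEMPLATE`, `ALLOWED_PARAMS` and `render_job_script` are all assumptions made for illustration. It only shows how a template can restrict the user to a small set of essential parameters.

```python
from string import Template

# Hypothetical job-script template. The scheduler directives and the
# "simulate" command are illustrative assumptions, not HEAppE content.
JOB_TEMPLATE = Template(
    "#!/bin/bash\n"
    "#SBATCH --nodes=$nodes\n"
    "#SBATCH --time=$walltime\n"
    "srun ./simulate --input $input_path\n"
)

# Only these parameters are exposed to the user; the rest of the script is fixed.
ALLOWED_PARAMS = {"nodes", "walltime", "input_path"}


def render_job_script(params: dict[str, str]) -> str:
    """Fill the template, rejecting any parameter that is not exposed."""
    unknown = set(params) - ALLOWED_PARAMS
    if unknown:
        raise ValueError(f"parameters not exposed by template: {sorted(unknown)}")
    missing = ALLOWED_PARAMS - set(params)
    if missing:
        raise ValueError(f"missing parameters: {sorted(missing)}")
    return JOB_TEMPLATE.substitute(params)


script = render_job_script(
    {"nodes": "4", "walltime": "01:00:00", "input_path": "/data/run1"}
)
```

Because unknown keys are rejected rather than ignored, a user cannot inject additional scheduler options through the parameter set.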

Embedding of HPC and Cloud-Computing systems

The LEXIS platform can address HPC clusters as backends as well as Cloud-Computing (e.g. OpenStack, Kubernetes) platforms and more. This reflects the fact that European Supercomputing centres nowadays often complement their managed HPC clusters with Cloud-Computing facilities.

HPC clusters thus offer efficient job execution directly on bare-metal hardware with a specialised software stack, while the cloud environments offer full flexibility and customised software stacks from the operating-system level up. The LEXIS Platform, with the help of HEAppE, can start jobs and virtual machines or containers in both kinds of environment. It is also well prepared for a convergence scenario in which containers may be executed on HPC systems.

LEXIS Platform Core vs. LEXIS Platform Node features

The LEXIS Platform offers some stable, centralised core services, such as the data catalogue, the identity and access management, and the workflow management. At IT4Innovations, for example, the full platform software stack is deployed. Integrating a further centre with the platform is a much lower-threshold action: we have prepared lightweight installation bundles for centres interested in joining the platform. These bundles deploy, in particular, HEAppE and a subset of the Distributed Data Infrastructure components.

Management of complex, mixed Cloud-Computing/HPC workflows

LEXIS manages and orchestrates versatile workflows utilising various backend systems of its Distributed Computing Infrastructure (DCI).

Workflow orchestration

Executing a complex workflow usually involves not a single command but a set of interdependent tasks. In most cases, these tasks can be described by a directed acyclic graph (DAG). Workflows represented as DAGs can then be executed on the computational infrastructure. An important requirement for us is the ability to orchestrate tasks on HPC batch-scheduling systems as well as on Cloud schedulers. These functionalities are implemented via Apache Airflow, with respective adaptors to HEAppE for HPC task execution.
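To make the DAG notion concrete, the following sketch derives a valid execution order for a small mixed Cloud/HPC workflow. It uses only the Python standard library (`graphlib`), not Apache Airflow, and the task names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical workflow: each task maps to the set of tasks it depends on.
workflow = {
    "stage_input": set(),
    "fetch_reference": set(),
    "preprocess_cloud": {"stage_input"},
    "simulate_hpc": {"preprocess_cloud", "fetch_reference"},
    "postprocess_cloud": {"simulate_hpc"},
}


def execution_order(dag: dict[str, set[str]]) -> list[str]:
    """Return one valid task order; raises CycleError if the graph has a cycle."""
    return list(TopologicalSorter(dag).static_order())


order = execution_order(workflow)
```

Any order returned this way runs each task only after all of its dependencies. Tasks without a mutual dependency, such as `stage_input` and `fetch_reference` here, may appear in either order and could be run in parallel by an orchestrator.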

Platform management core - user-identity/access management and security

Secure and versatile identity and access management, which takes into account the roles of users in projects and organisations, is implemented in the Platform’s management core. Even the pilot use cases of the platform dealt with sensitive data, and the participating computing centres require a high level of security and identity assurance.

While LEXIS provides single sign-on through the platform’s IAM, delegated authentication and identity federation through European cross-site identities are preferred. Integration with Puhuri, MyAccessID, FENIX and B2ACCESS provides broad compatibility here, including the transfer of account attributes.

Diving deeper into security and AAI aspects, the LEXIS Platform is built based on the “zero trust” concept and “security by design” principles. The architecture is modular, with communication realised by REST APIs. Authentication and authorisation are realised using OAuth 2.0 and OpenID Connect or SAML. Access control is role-based.
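Role-based access control can be sketched as follows. The role names, permission strings and the `User` type are hypothetical and do not reflect the actual LEXIS role model. The sketch only shows the principle of checking a per-project role and denying by default.

```python
from dataclasses import dataclass, field

# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "project_manager": {"dataset:read", "dataset:write", "workflow:run", "project:manage"},
    "project_member": {"dataset:read", "dataset:write", "workflow:run"},
    "viewer": {"dataset:read"},
}


@dataclass
class User:
    name: str
    # Maps a project identifier to the role the user holds in that project.
    roles: dict[str, str] = field(default_factory=dict)


def is_allowed(user: User, project: str, permission: str) -> bool:
    """Deny by default: allow only if the user's role in this project grants it."""
    role = user.roles.get(project)
    return role is not None and permission in ROLE_PERMISSIONS.get(role, set())


alice = User("alice", roles={"climate-sim": "project_member"})
```

With this setup, `is_allowed(alice, "climate-sim", "workflow:run")` is true, while the same request against a project in which she has no role is refused.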

Automated big data management in workflows

The LEXIS Distributed Data Infrastructure (DDI) holds input, output and intermediate data for computing on LEXIS. It is addressed by the LEXIS Portal and orchestrator for automated cross-system data movement within workflows, and for easy access to storage for uploading input data and downloading results. Up-to-date technical details on the current DDI setup, which relies on LEXIS REST APIs and EUDAT/iRODS (Integrated Rule-Oriented Data System) for data exchange, can be found here: Distributed Data Infrastructure.

Data upload and download

Data can be uploaded to the platform and downloaded from it over HTTPS through the portal (uploads are resumable), and also via direct iRODS transfers. These functionalities use the DDI in the background, which ensures appropriate, easy and conceptually sound data management as well as metadata enrichment.

Distributed Data Infrastructure, staging and connectivity

Once data is stored on the platform - i.e. on the LEXIS DDI - it is available everywhere in the LEXIS platform, across Europe. This is realised through iRODS remote file-access capabilities and the features of the LEXIS Data-Staging API, which executes queued file-transfer requests from the orchestrator or user. Besides iRODS file fetching, the Staging API uses various file-transfer mechanisms (SCP, GridFTP, etc. - as available) and file-staging-area strategies. Thus, it can easily stage data to HPC clusters with various configurations, keeping their user-isolation concept intact. All necessary backend file systems of HPC and Cloud-Computing systems within LEXIS are appropriately addressed.
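The queueing behaviour described above can be sketched roughly as follows. This is not the LEXIS Data-Staging API: the `TransferRequest` type, the mechanism names and the preference order are assumptions, and no data is actually moved. The sketch only shows requests being processed in order, each with a transfer mechanism chosen from those the target system offers.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class TransferRequest:
    source: str
    target: str
    # Transfer mechanisms available on the target system (hypothetical names).
    target_mechanisms: tuple[str, ...]


# Assumed preference order; a real deployment may choose differently.
PREFERRED = ("gridftp", "scp")


def pick_mechanism(request: TransferRequest) -> str:
    """Choose the first preferred mechanism that the target system offers."""
    for mechanism in PREFERRED:
        if mechanism in request.target_mechanisms:
            return mechanism
    raise ValueError(f"no supported mechanism for target {request.target}")


def process_queue(queue: deque) -> list[tuple[str, str, str]]:
    """Handle requests in FIFO order; return (source, target, mechanism) records."""
    log = []
    while queue:
        request = queue.popleft()
        log.append((request.source, request.target, pick_mechanism(request)))
    return log


queue = deque([
    TransferRequest("ddi:/project/input", "hpc-a:/scratch/job1", ("scp",)),
    TransferRequest("ddi:/project/mesh", "hpc-b:/scratch/job2", ("scp", "gridftp")),
])
log = process_queue(queue)
```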

The LEXIS DDI APIs also facilitate an automatised data transfer from and to public data sources and open or private remote repositories. Appropriate storage backends at the computing centres allow for keeping/caching data for re-use.

EUDAT features, FAIR data

EUDAT B2HANDLE and B2SAFE as well as iRODS internal features are used in the LEXIS DDI to handle data according to the FAIR principles (Findable, Accessible, Interoperable, Reusable). Via B2HANDLE, each dataset in the LEXIS DDI can be assigned a globally unique persistent identifier (PID), and metadata fulfilling the DataCite standard are stored alongside the data. B2SAFE preserves these principles when triggering data replication processes as well.
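As an illustration of metadata stored alongside a dataset, the sketch below checks a record for the properties that the DataCite Metadata Schema marks as mandatory, as we understand the schema. The record layout, the field spelling and the identifier value are placeholders and do not represent the format used by the LEXIS DDI.

```python
# Mandatory DataCite properties (as we understand the schema); the key
# spelling used here is an assumption for this sketch.
REQUIRED_FIELDS = (
    "identifier",
    "creators",
    "titles",
    "publisher",
    "publicationYear",
    "resourceType",
)


def missing_fields(record: dict) -> list[str]:
    """Return the mandatory fields that are absent or empty, in schema order."""
    return [name for name in REQUIRED_FIELDS if not record.get(name)]


# Placeholder record; the PID below is invented for illustration.
record = {
    "identifier": "21.T12345/example-0001",
    "creators": ["Example, Researcher"],
    "titles": ["Example simulation output"],
    "publisher": "Example Computing Centre",
    "publicationYear": 2024,
    "resourceType": "Dataset",
}
```

A complete record yields an empty list from `missing_fields`, which makes the function usable as a simple pre-publication check.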

Data safety and security

Leveraging EUDAT-B2SAFE and LEXIS features, data on the LEXIS DDI can be replicated across LEXIS sites for excellent availability and redundant storage. An encryption and compression system controlled via REST APIs allows keeping sensitive data encrypted at rest within the DDI.

Resource allocation and accounting/billing principles

One principal feature of the LEXIS platform is adaptation to the accounting and billing rules of the infrastructure providers and integration with these providers. The LEXIS web portal can be used to request allocations (financed or on research-proposal basis) through an appropriate service (e.g. Puhuri) and to integrate granted resources. Computations through the LEXIS platform use granted resources at the participating computing centres, making them accessible within a LEXIS computational project. Such a project bundles the eligible users and a manager. Resources granted and used are tracked within the LEXIS portal, together with user identities and their role-based access rights within projects and organisations.
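The relationship between a project, its users and its granted resources can be sketched as below. The `Project` type, the use of core hours as the accounting unit and the method names are assumptions for illustration and do not describe the LEXIS accounting implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Project:
    """Hypothetical computational project bundling users, a manager and a grant."""
    name: str
    granted_core_hours: float
    manager: str
    users: set[str] = field(default_factory=set)
    used_core_hours: float = 0.0

    def record_usage(self, user: str, core_hours: float) -> None:
        """Charge usage to the grant; only project members may consume it."""
        if user != self.manager and user not in self.users:
            raise PermissionError(f"{user} is not part of project {self.name}")
        if self.used_core_hours + core_hours > self.granted_core_hours:
            raise ValueError("usage would exceed the granted allocation")
        self.used_core_hours += core_hours

    @property
    def remaining_core_hours(self) -> float:
        return self.granted_core_hours - self.used_core_hours


project = Project("climate-sim", granted_core_hours=1000.0, manager="alice", users={"bob"})
project.record_usage("bob", 250.0)
```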

Further information can be found here: Resource management and accounting